Today is the 20th anniversary of the first commit to the public Django repository!

Ten years ago we threw a multi-day 10th birthday party for Django back in its birthtown of Lawrence, Kansas. As a personal celebration of the 20th, I'm revisiting the talk I gave at that event and writing it up here.

Here's the YouTube video. Below is a full transcript, plus my slides and some present-day annotations.

Django Origins (and some things I have built with Django)

Presented 11th July 2015 at Django Birthday in Lawrence, Kansas

My original talk title, as you'll see on your programs, was "Some Things I've Built with Django." But then I realized that we're here in the birthplace of Django, celebrating the 10th birthday of the framework, and nobody's told the origin story yet. So, I've switched things around a little bit. I'm going to talk about the origin story of Django, and then if I have time, I'll do the self-indulgent bit and talk about some of the projects I've shipped since then.

I think Jacob's introduction hit on something I've never really realized about myself. I do love shipping things. The follow-up and the long-term thing I'm not quite so strong on. And that came into focus when I was putting together this talk and realized that basically every project I'm going to show you, I had to dig out of the Internet Archive.

Ten years on from writing this talk I'm proud that I've managed to overcome my weakness in following up - I'm now actively maintaining a bewildering array of projects, having finally figured out how to maintain things in addition to creating them!

But that said, I will tell you the origin story of Django.

#

For me, the story starts very much like Jacob's. I was reading RSS feeds back in 2003, and I saw this entry on Adrian's blog, talking about a job opportunity for a web programmer or developer in Lawrence, Kansas.

Now, I was in England. I was at university. But my university had just given me the opportunity to take a year abroad, to take a year out to do an internship year in industry. My girlfriend at the time was off to Germany to do her year in industry. So I was like, well, you know, do I stay at university? And then this comes along.

So I got in touch with Adrian and said, you know, could this work as a year-long internship instead? And he was reading my blog and I was reading his blog, and we knew that we aligned on a bunch of things. So we thought we'd give it a go.

Now, if you look through this job ad, you'll see that this is all about expert knowledge of PHP and experience designing and maintaining databases, particularly MySQL. So this was a PHP and MySQL gig.

But when I arrived in Kansas, we quickly realized that we were both kind of over PHP. You know, we'd both built substantial systems in PHP, and we were running up against the limits of what you can do in PHP and have your code still be clean and maintainable.

And at the same time, we were both reading Mark Pilgrim's blog (archive link). Mark Pilgrim had been publishing Dive into Python and making a really strong case for why Python was a great web language.

So we decided that this was the thing we wanted to do. But we both had very strong opinions about how you should build websites. Things like URL design matters, and we care about the difference between GET and POST, and we want to use this new thing called CSS to lay out our web pages. And none of the existing Python web frameworks really seemed to let us do what we wanted to do.

#

Now, before I talk more about that, I'll back up and talk about the organization we were working for, the Lawrence Journal World.

David gave a great introduction to why this is an interesting organization. Now, we're talking about a newspaper with a circulation of about 10,000, like a tiny newspaper, but with a world-class newsroom, huge amounts of money being funneled into it, and like employing full-time software developers to work at a local newspaper in Kansas.

#

And part of what was going on here was this guy. This is Rob Curley. He's been mentioned a few times before already.

#

And yeah, Rob Curley set this unofficial mission statement that we "build cool shit". This is something that Adrian would certainly never say. It's not really something I'd say. But this is Rob through and through. He was a fantastic showman.

And this was really the appeal of coming out to Lawrence, seeing the stuff they'd already built and the ambitions they had.

#

This is Lawrence.com. This is actually the Lawrence.com written in PHP that Adrian had built as the sole programmer at the Lawrence Journal World. And you should check this out. Like, even today, this is the best local entertainment website I have ever seen. This has everything that is happening in the town of Lawrence, Kansas, population 150,000 people. Every gig, every venue, all of the stuff that's going on.

And it was all written in PHP. And it was a very clean PHP code base, but it was really stretching the limits of what it's sensible to do using PHP 4 back in 2003.

So we had this goal when we started using Python. We wanted to eventually rebuild Lawrence.com using Python. But in order to get there, we had to first build -- we didn't even know it was a web framework. We called it the CMS.

#

And so when we started working on Django, the first thing that we shipped was actually this website. This is the 6 News Lawrence weather page -- 6 News is the TV channel here.

And I think this is pretty cool. So Dan Cox, the designer, was a fantastic illustrator. We actually have this illustration of the famous Lawrence skyline where each panel could be displayed with different weather conditions depending on the weather.

And in case you're not from Kansas, you might not have realized that the weather is a big deal here. You know, you have never seen more excited weathermen than when there's a tornado warning and they get to go on local news 24 hours a day giving people updates.

#

So we put the site live first. This was the first ever Django website. We then did the rest of the 6 News Lawrence website.

And this -- Adrian reminded me this morning -- the launch of this was actually delayed by a week because the most important feature on the website, which is clearly the photograph of the news people who are on TV, they didn't like their hairdos. They literally told us we couldn't launch the website until they'd had their hair redone, had the headshots retaken, had a new image put together. But, you know, image is important for these things.

So anyway, we did that. We did 6 News Lawrence. And by the end of my year in Kansas, Adrian had rewritten all of Lawrence.com as well.

#

So this is the Lawrence.com powered by Django. And one thing I think is interesting about this is when you talk to like David Heinemeier Hansson about Rails, he'll tell you that Rails is a framework that was extracted from Basecamp. They built Basecamp and then they pulled out the framework that they used and open sourced it.

I see Django the other way around. Django is a framework that was built up to create Lawrence.com. Lawrence.com already existed. So we knew what the web framework needed to be able to do. And we just kept on iterating on Django or the CMS until it was ready to produce this site here.

#

And for me, the moment I realized that we were onto something special was actually when we built this thing. This is a classic Rob Curley project. So Rob was the boss. He had the crazy ideas and he didn't care how you implemented them. He just wanted this thing done.

And he came to us one day and said, you know, the kids' little league season is about to start. Like kids playing softball or baseball. Whatever the American kids with bats thing is. So he said, kids' little league season is about to start. And we are going to go all out.

#

I want to treat these kids like they're the New York Yankees. We're going to have player profiles and schedules and photos and results.

#

And, you know, we're going to have the ability for parents to get SMS notifications whenever their kid scores.

#

And we're going to have 360 degree, like, interactive photos of all of the pitches in Lawrence, Kansas, that these kids are playing games on.

They actually did send a couple of interns out with a rig to take 360 degree virtual panoramas of Fenway Park and Lawrence High School and all of these different places.

#

And he said -- and it starts in three days. You've got three days to put this all together.

And we pulled it off because Django, even at that very early stage, had all of the primitives you needed to build 360 degree interactives. That was all down to the interns. But we had all of the pieces we needed to pull this together.

#

So when we were working on it back then, we called it the CMS.

#

A few years ago, Jacob found a wiki page with some of the names that were being brainstormed for the open source release. And some of these are great. There's Brazos -- I don't know where that came from -- Webbing, Physique, Anson.

#

This is my favorite name. I think this is what I proposed -- is the Tornado Publishing System.

#

And the reason is that I was a really big fan of Office Space. And if we had the Tornado, we could produce TPS reports, which I thought would be amazing.

But unfortunately, this being Kansas, the association of Tornadoes isn't actually a positive one.

Private Dancer, Physgig, Lavalia, Pithy -- yeah. I'm very, very pleased that they picked the name that they did.

#

So one of our philosophies was build cool shit. The other philosophy we had was what we called "Wouldn't it be cool if?"

So there were no user stories or careful specs or anything. We'd all sit around in the basement and then somebody would go "Wouldn't it be cool if...", and they'd say something. And if we thought it was a cool idea, we'd build it and we'd ship it that day.

#

And my favorite example of "Wouldn't it be cool if?" -- this is a classic Adrian one -- is "Wouldn't it be cool if the downloads page on Lawrence.com featured MP3s you could download of local bands?" And seeing as we've also got the schedule of when the bands are playing, why don't we feature the audio from bands who you can go and see that week?

So this page will say, "OK Jones are playing on Thursday at the Bottleneck. Get their MP3. Listen to the radio station." We had a little MP3 widget in there. Go and look at their band profile. All of this stuff.

Really, these kinds of features are what you get when you take 1970s relational database technology and use it to power websites, which -- back in 2003, in the news industry -- still felt incredibly cutting edge. But, you know, it worked.

And that philosophy followed me through the rest of my career, which is sometimes a good idea and often means that you're left maintaining features that seemed like a good idea at the time and quickly become a massive pain!

#

After I finished my internship, I finished my degree in England and then ended up joining up with Yahoo. I was actually working out of the Yahoo UK office but for an R&D team in the States. I was there for about a year and a half.

One of the things I learned is that you should never go and work for an R&D team, because the problem with R&D teams is you never ship. I was there for a year and a half and I basically have nothing to show for it in terms of actual shipped features.

We built some very cool prototypes. And actually, after I left, one of the projects I worked on, Yahoo FireEagle, did end up getting spun out and turned into a real product.

#

But there is one project -- the first project I built at Yahoo using Django that I wanted to demonstrate. This was for Yahoo's internal hack day. And so Tom Coates and myself, who were working together, we decided that we were going to build a mashup, because it was 2005 and mashups were the cutting edge of computer science.

So we figured, OK, let's take the two most unlikely Yahoo products and combine them together and see what happens. My original suggestion was that we take Yahoo Dating and Yahoo Pets. But I was told that actually there was this thing called Dogster and this other thing called Catster, which already existed and did exactly that.

So the next best thing, we went for Yahoo News and Yahoo Horoscopes. And what we ended up building -- and again, this is the first Django application within Yahoo -- was Yahoo Astronewsology.

And the idea was you take the news feed from Yahoo News, you pull out anything that looks like it's a celebrity's name, look up their birth date, use that to look up their horoscope, and then combine them on the page.
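The birth-date-to-horoscope step in that pipeline boils down to mapping a date onto a star sign. Here is a minimal sketch of that one step in Python. The function name `star_sign` and the cutoff table are assumptions for illustration (sign boundaries differ by a day between sources), and this is not the code from the original hack.

```python
from datetime import date

# (month, last day of the sign within that month, sign), in calendar order.
# These boundaries follow one common convention; sources vary by a day or so.
_SIGN_ENDS = [
    (1, 19, "Capricorn"), (2, 18, "Aquarius"), (3, 20, "Pisces"),
    (4, 19, "Aries"), (5, 20, "Taurus"), (6, 20, "Gemini"),
    (7, 22, "Cancer"), (8, 22, "Leo"), (9, 22, "Virgo"),
    (10, 22, "Libra"), (11, 21, "Scorpio"), (12, 21, "Sagittarius"),
]


def star_sign(birth_date: date) -> str:
    """Return the star sign for a birth date (hypothetical helper)."""
    for month, last_day, sign in _SIGN_ENDS:
        # Tuple comparison: the first boundary on or after the date wins.
        if (birth_date.month, birth_date.day) <= (month, last_day):
            return sign
    # Anything after 21 December wraps around to Capricorn.
    return "Capricorn"
```

With the sign in hand, the remaining work is a lookup against whatever horoscope feed is available and a template that puts the headline and the horoscope side by side.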

And in a massive stroke of luck, we built this the week that Dick Cheney shot his friend in the face while out hunting.

Dick Cheney's horoscope for that week says, "A very close friend who means a great deal to you has found it necessary to go out of their way to tick you off. You're not just angry, you're furious. Before you let go and let them have it, be sure you're right. Feeling righteous is far better than feeling guilty."

And so if Dick Cheney had only been reading his horoscopes, maybe that whole situation would have ended very differently.

#

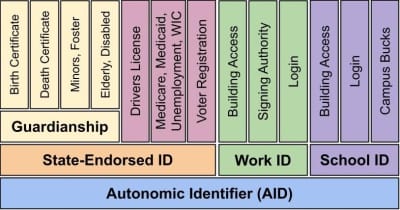

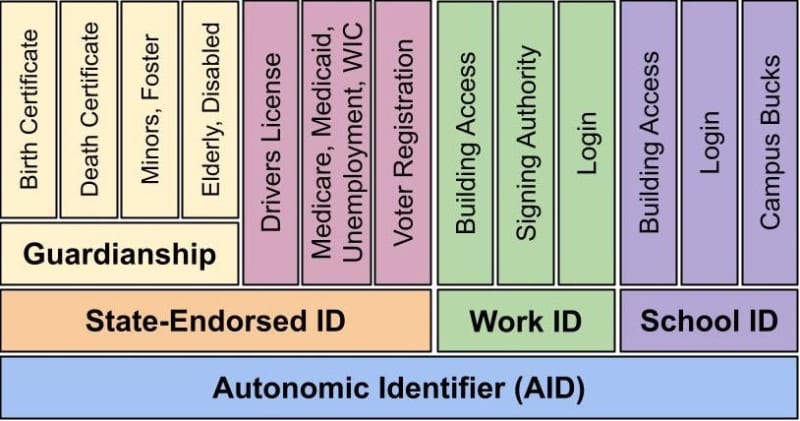

So after Yahoo, I spent a while doing consulting and things, mainly around OpenID because I was determined to make OpenID work. I was absolutely convinced that if OpenID didn't take off, just one company would end up owning single sign-on for the entire internet, and that would be a total disaster.

And with hindsight, it didn't quite happen. Facebook login looked like it was going to do that a few years ago, but these days there's enough variety out there that I don't feel like we all have to submit to our Facebook masters.

But, you know, I was enjoying freelancing and consulting and so on. And then I ended up going for coffee with somebody who worked for The Guardian.

I'm sure you've all heard of The Guardian. It's one of England's most internationally focused newspapers. It's a very fine publication. And I realized that I really missed working in a newsroom environment. And I was incredibly jealous of people like Adrian, who'd gone off to the Washington Post and was doing data journalism there, and Derek Willis as well, who bounced from the Post to The New York Times. There was all of this cool data journalism stuff going on.

And The Guardian's pitch was basically, we've been building a CMS from scratch in Java with a giant team of engineers, and we've built it and it's really cool, but we're not shipping things quickly. We want to start exploring this idea of building things much faster to fit in with the news cycle.

And that was a very, very tempting thing for me to get involved with. So I went to work for The Guardian.

#

And The Guardian have a really interesting way of doing onboarding of new staff. The way they do it is they set you up on coffee dates with people from all over the organization. So one day you'll be having coffee with somebody who sells ads, and the next day it'll be the deputy editor of the newsroom, and the next day it'll be a journalist somewhere. And each of these people will talk to you and then they'll suggest other people for you to meet up with. So over the first few weeks that you're there, you meet a huge variety of people.

And time and time again, as I was talking to people, they were saying, "You know what? You should go and talk to Simon Rogers, this journalist in the newsroom."

This is Simon Rogers. I went down to talk to him, and we had this fascinating conversation. So Simon is a journalist. He worked in the newsroom, and his speciality was gathering data for The Guardian's infographics. Because in the paper they have graphs and charts and all sorts of things like that that they publish.

It turns out that Simon was the journalist who knew how to get that data out of basically any source you can imagine. If you wanted data, he would make some phone calls, dig into some government contacts and things, and he'd get those raw numbers. And all of the other journalists thought he was a bit weird, because he liked hanging out and editing Excel spreadsheets and stuff.

So I said to him halfway through this conversation, "Just out of interest, what do you do with those Excel spreadsheets?" And he's like, "Oh, I keep them all on my hard drive." And showed me this folder with hundreds and hundreds of meticulously researched, properly citable news quality spreadsheets full of data about everything you could imagine. And they lived on his hard drive and nowhere else.

And I was like, "Have you ever talked to anyone in the engineering department upstairs?" And we made this connection.

And so from then on, we had this collaboration going where he would get data and he'd funnel it to me and see if I or someone else in the engineering department at The Guardian could do something fun with it.

#

And so that was some of the most rewarding work of my career, because it's journalism, you know, it's news, it's stuff that matters. The deadlines are ridiculous. If a news story breaks and it takes you three weeks to turn around a piece of data journalism around it, why did you even bother? And it's perfect for applying Django to.

So the first story I got to work on at the Guardian was actually one of the early WikiLeaks things. This is before WikiLeaks was like massively high profile. But quite early on, WikiLeaks leaked a list of all of the members of the British National Party, basically the British Nazis. They leaked a list of all of their names and addresses.

And the Guardian is an ethical newspaper, so we're not going to just publish 18,000 people's names and addresses. But we wanted to figure out if there was something we could do that would make use of that data but wouldn't be violating anyone's individual privacy.

And so what we did is we took all of the addresses, geocoded them, figured out which parliamentary constituency they lived in, and used that to generate a heat map that's actually called a choropleth map, I think, of the UK showing where the hotspots of BNP activity were.

And this works because in the UK, parliamentary constituencies are designed to all have around about the same population. So if you just like make the color denser for the larger numbers of BNP members, you get this really interesting heat map of the country.

#

And what was really cool about this is that I did this using SVG, because we have an infographics department with Illustrator who are good at working with SVG. And it's very easy with an SVG file with the right class names on things to set colors on different regions.
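To make the class-name trick concrete, here is a minimal sketch of the approach in Python, using only the standard library. It assumes each region in the SVG carries a class equal to a constituency slug, and that the input is one slug per geocoded address. The names `shade` and `colour_map` and the white-to-red scale are hypothetical choices for illustration, not the tool I actually wrote.

```python
import xml.etree.ElementTree as ET
from collections import Counter

SVG_NS = "http://www.w3.org/2000/svg"


def shade(count: int, max_count: int) -> str:
    """Map a count onto a white-to-red hex colour; higher counts are denser."""
    if max_count <= 0:
        return "#ffffff"
    level = round(255 * (1 - count / max_count))
    return f"#ff{level:02x}{level:02x}"


def colour_map(svg_text: str, constituencies: list[str]) -> str:
    """Set a fill on every SVG element whose class matches a constituency slug."""
    counts = Counter(constituencies)  # members per constituency
    max_count = max(counts.values(), default=0)
    ET.register_namespace("", SVG_NS)  # keep plain <svg>/<path> tags on output
    root = ET.fromstring(svg_text)
    for element in root.iter():
        for class_name in element.get("class", "").split():
            if class_name in counts:
                element.set("fill", shade(counts[class_name], max_count))
    return ET.tostring(root, encoding="unicode")
```

Because the output is still an ordinary SVG file, it can be opened in Illustrator afterwards, which is what makes the hand-off to print so painless.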

And because we produced it in SVG, we could then hand it over to the print department, and the next day it was out in the paper. It was like a printed thing on paper, on like dead trees distributed all over the country, which I thought was super cool.

So that was the first data journalism project that we did at The Guardian. And it really helped prove that given the right data sets and like the right tools and a bit of freedom, you can do some really cool things.

The first few times I did this, I did it by hand. Then we had The Guardian's first hack day and I was like, well okay, I'm going to build a little self-service tool for our infographics journalists to like dump in a bunch of CSV numbers and get one of these maps out of it.

So I built this tool. I didn't have anywhere official to deploy it, so I just ran it on my Linux desktop underneath my desk. And they started using it and putting things in the paper and I kind of forgot about it. And every now and then I'd get a little feature request.

A few years after I left The Guardian, I ran into someone who worked there. And he was like, yeah, you know that thing that you built? So we had to keep your desktop running for six months after you left. And then we had to like convert it into a VMware instance. And as far as I know, my desktop is still running as a VMware instance somewhere in The Guardian.

Which ties into the Simon database, I guess. The hard thing is building stuff is easy. Keeping it going it turns out is surprisingly difficult.

#

This was my favorite project at The Guardian. There was a scandal in the UK a few years ago where it turned out that UK members of parliament had all been fiddling their expenses.

And actually the background on this is that they're the lowest paid MPs anywhere in Europe. And it seems like the culture had become that you become an MP and on your first day somebody takes you aside and goes, look, I know the salary is terrible. But here's how to fill your expenses and make up for it.

This was a scandal that was brewing for several years. The Guardian had actually filed freedom of information requests to try and get these expense reports. Because they were pretty sure something dodgy was going on. The government had dragged their heels in releasing the documents.

And then, just a month before they finally released the documents, a rival newspaper, the Telegraph, managed to get hold of a leaked copy of all of these expenses. And so the Telegraph had 30 days lead on all of the other newspapers to dig through and try and find the dirt.

So when they did release the expenses 30 days later, we had a race on our hands because we needed to analyze 20,000 odd pages of documents. Actually, here it says 450,000 pages of documents in order to try and find anything left that was newsworthy.

#

And so we tackled this with crowdsourcing. We stuck up a website. We told Guardian readers, come to this website, hit the button, we'll show you a random page from someone's expenses. And then you can tell us if you think it's not interesting, interesting, or we should sic an investigative reporter on it.

#

And one of the smartest things we did with this is we added a feature where you could put in your postcode, we'd figure out who your MP was, and then we would show you their smug press photo. You know, their smug face next to all of their expense claims that they'd filed.

And this was incredibly effective. People were like, "Ooh, you look so smug. I'm going to get you." And once we put this up, within 18 hours our community had burned through hundreds of thousands of pages of expense documents trying to find this stuff.

#

And again, this was built in Django. We had, I think, five days warning that these documents are coming out. And so it was a total, like, I think I built a proof of concept on day one. That was enough to show that it was possible. So I got a team with a designer and a couple of other people to help out. And we had it ready to go when the document dump came out on that Friday.

And it was pretty successful. We dug up some pretty interesting stories from it. And it was also just a fantastic interactive way of engaging our community. And, you know, the whole crowdsourcing side of it was super fun.

So I guess the thing I've learned from that is that, oh, my goodness, it's fun working for newspapers. And actually, if you -- the Lawrence Journal World, sadly, no longer has its own technology team. But there was a period a few years ago where they were doing some cracking data journalism work. Things like tracking what the University of Kansas had been using its private jet for, and letting people explore the data around that and so on.

The other thing we did at the Guardian, this is going back to Simon Rogers, is he had all of these spreadsheets on his hard drive. And we're like, okay, we should really try and publish this stuff as raw data. Because living on your hard drive under your desk is a crying shame.

And the idea we came up with was essentially to start something we called the Data blog and publish them as Google spreadsheets. You know, we spent a while thinking, well, you know, what's the best format to publish these things in? And we're like, well, they're in Excel. Google spreadsheets exists and it's pretty good. Let's just put a few of them up as Google sheets and see what people do with them.

And it turns out that was enough to build this really fun community of data nerds around the Guardian's data blog who would build their own visualizations. They'd dig into the data. And it meant that we could get all sorts of -- like, we could get so much extra value from the work that we were already doing to gather these numbers for the newspaper. That stuff was super fun.

#

Now, while I was working at the Guardian, I also got into the habit of building some projects with my girlfriend at the time, now my wife Natalie. So Natalie and I have skill sets that fit together very nicely. She's a front-end web developer. I do back-end Django stuff. I do just enough ops to be dangerous. And so between the two of us, we can build websites.

#

The first thing we worked on together was a site which I think some people here should be familiar with, called Django People. The idea was just, you know, the Django community appears to be quite big now. Let's try and get people to stick a pin on a map and tell us where they are.

Django People still exists today. It's online thanks to a large number of people constantly bugging me at DjangoCons and saying, look, just give us the code and the data and we'll get it set up somewhere so it can continue to work. And that's great. I'm really glad I did that because this is the one project that I'm showing you today which is still available on the web somewhere. (2025 update: the site is no longer online.)

But Django People was really fun. And the thing we learned from this, my wife and I, is that we can work together really well on things.

#

The other side project we did was much more of a collaborative effort. Again, this no longer exists, or at least it's no longer up on the web. And I'm deeply sad about this because it's my favorite thing I'm going to show you.

But before I show you the project, I'll show you how we built it. We were at a BarCamp in London with a bunch of our new friends and somebody was showing photographs of this Napoleonic sea fortress that they had rented out for the weekend from an organization in the UK called the Landmark Trust, who basically take historic buildings and turn them into vacation rentals as part of the work to restore them.

And we were like, "Oh, wouldn't it be funny if we rented a castle for a week and all of us went out there and we built stuff together?" And then we were like, "That wouldn't be funny. That would be freaking amazing."

So we rented this place. This is called Fort Clonque. It's in the Channel Islands, halfway between England and France. And I think it cost something like $2,000 for the week, but you split that between a dozen people and it's like youth hostel prices to stay in a freaking fortress.

#

So we got a bunch of people together and we went out there and we just spent a week. We called it /dev/fort. We spent a week just building something together.

#

And the thing we ended up building was called Wildlife Near You. And what Wildlife Near You does is it solves the eternal question, "Where is my nearest llama?"

#

#

Once again, this is a crowdsourcing system. The idea is that you go to wildlifenearyou.com and you've just been on a trip to like a nature park or a zoo or something. And so you create a trip report saying, "I went to the Red Kite feeding station and I saw a common raven and a common buzzard and a red kite." And you import any of your photos from Flickr and so forth.

#

And you build up this profile saying, "Here are all the places I've been and my favorite animals and things I've seen."

#

And then once we've got that data set, we can solve the problem. You can say, "Search for llamas near Brighton." And it'll say, "Your nearest llama is 18 miles away," and it'll show you pictures of llamas and all of the llama things.
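The core of a "nearest llama" query is a distance calculation over the sightings people have reported. Below is a minimal sketch in Python using the haversine formula. The dictionary shape for a sighting and the function names are assumptions for illustration, and a real site with lots of data would more likely push this into a spatial database query than loop in Python.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def distance_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, via the haversine formula."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlambda = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


def nearest(sightings, lat, lon):
    """Return (distance, sighting) for the closest sighting, or None if empty.

    Each sighting is assumed to be a dict with "lat" and "lon" keys.
    """
    if not sightings:
        return None
    return min(
        ((distance_miles(lat, lon, s["lat"], s["lon"]), s) for s in sightings),
        key=lambda pair: pair[0],
    )
```

Filtering the sightings to a single species before calling `nearest` gives the "search for llamas near here" behaviour.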

#

And we have species pages. So here's the red panda page. 17 people love red pandas. You can see them at Taronga Zoo.

#

And then our most viral feature was we had all of these photos of red pandas, but how do we know which is the best photo of a red panda that we should highlight on the red panda page? So we basically built Hot or Not for photographs of wildlife.

So it's like, "Which marmot photo is better?" And you say, "Well, clearly the one on the right." And it's like, "Okay, which skunk photo is better?"

I was looking at the logs and people would go through hundreds and hundreds of photos. And you'd get scores and you can see, "Oh, wow, my marmot photo is the second best marmot photo on the whole website."
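One simple way to turn those head-to-head votes into a "second best marmot photo" ranking is to score each photo by the fraction of match-ups it has won. The sketch below shows that idea in Python. The `PhotoContest` class is hypothetical, and win rate is an assumption chosen for simplicity rather than a description of the scoring Wildlife Near You actually used.

```python
from collections import defaultdict


class PhotoContest:
    """Track pairwise votes and rank photos by win rate (illustrative only)."""

    def __init__(self):
        self.wins = defaultdict(int)
        self.appearances = defaultdict(int)

    def record_vote(self, winner, loser):
        """Record that `winner` was picked over `loser` in one match-up."""
        self.wins[winner] += 1
        self.appearances[winner] += 1
        self.appearances[loser] += 1

    def score(self, photo):
        """Fraction of match-ups this photo has won; 0.0 if never shown."""
        shown = self.appearances.get(photo, 0)
        return self.wins.get(photo, 0) / shown if shown else 0.0

    def ranking(self):
        """All photos that have been shown, best win rate first."""
        return sorted(self.appearances, key=self.score, reverse=True)
```

A photo with a single lucky win would top this ranking, so a production version would probably want a minimum number of appearances or a smoothed score before crowning a winner.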

#

So that was really fun. And then we eventually took it a step further and said, "Okay, well, this is really fun, but this is a website that you have to type on, right?" And meanwhile, mobile phones are now getting HTML5 geolocation and stuff. So can we go a step further?

So we built owlsnearyou.com. And what owlsnearyou.com does is you type in the location, and it says, "Your nearest owl is 49 miles away. It's a spectacled owl at London Zoo. It was spotted one year ago by Natalie."

#

And if you went here on a mobile phone-- If you went here on a device that supported geolocation, it doesn't even ask you where you live. It's just like, "Oh, okay, here's your nearest owl."

And I think we shipped lions near you and monkeys near you and a couple of other domains, but owlsnearyou.com was always my favorite.

So looking at this now, we should really get this stuff up and running again. It was freaking amazing. Like, this for me is the killer app of all killer apps.

(We did eventually bring this idea back as www.owlsnearme.com, using data from iNaturalist - that's online today.)

#

So there have actually been a bunch of Devforts since then. One of the things we learned from Devfort is that building applications-- If you want to do a side project, doing one with user accounts and logins and so on, it's a freaking nightmare. It actually took us almost a year after we finished on the fort to finally ship Wildlife Near You because there were so many complexities. And then we had to moderate it and keep an eye on it and so on.

So if you look at the more recent Devforts, they've taken that to heart. And now they try and ship things which just work and don't require ongoing users logging in and all of that kind of rubbish.

But one of the other projects I wanted to show you that came out of a Devfort was something called Bugle. And the idea of Bugle is Bugle is a Twitter-like application for groups of hackers collaborating in a castle, fort, or other defensive structure who don't have an internet connection.

#

This was basically to deal with Twitter withdrawal when we were all on the fort together and we had an internal network. So Bugle, looking at it now, we could have been Slack! We could have been valued at $2 billion.

Yeah, Bugle is like an internal Twitter clone with a bunch of extra features like it's got a paste bin and to-do lists and all sorts of stuff like that.

#

And does anyone here know Ben Firshman? I think quite a few people do. Excellent. So Ben Firshman was out on a Devfort and I did a "Wouldn't it be cool if" on him. I said, "Wouldn't it be cool if all of our Twitter apps and our phones talked to Bugle instead on the network?"

#

And so if you go and look on GitHub, I bet this doesn't work anymore. But he did add magic Twitter support where you could run a local DNS server, redirect Twitter to Bugle, and he cloned enough of the Twitter API that Twitter apps would work and talk to Bugle instead.

We wanted to do a Devfort in America. You don't really have castles and forts that you can rent for the most part. If anyone knows of one, please come and talk to me because there's a distinct lack of defensible structures at least of the kind that we are used to back in Europe.

#

So I'm running out of time, but that's OK because the most recent project, Lanyrd, is something which most people here have probably encountered.

I will tell a little bit of the backstory of Lanyrd because it's kind of fun.

#

Lanyrd was a honeymoon project.

Natalie and I got married. The Wildlife Near You influence affected our wedding - it was a freaking awesome wedding! You know, in England, you can get a man with a golden eagle and a barn owl and various other birds to show up for about $400 for the day. And then you get to take photos like this.

#

So anyway, we got married, we quit our jobs, I had to leave the Guardian because we wanted to spend the next year or two of our lives just traveling around the world, doing freelancing work on our laptops and so on.

We got as far as Morocco, six months in, when we contracted food poisoning in Casablanca and were too sick to keep on travelling. It was also Ramadan, so it was really hard to get food and stuff. So we rented an apartment for two weeks and said, "Okay, well, since we're stuck for two weeks, let's finish that side project we've been talking about and ship it and see if anyone's interested."

#

So we shipped Lanyrd, which was built around the idea of helping people who use Twitter find conferences and events to go to. What we hadn't realised is that if you build something around Twitter, especially back in 2010, it instantly goes viral amongst people who use Twitter.

So that ended up cutting our honeymoon short, and we actually applied for Y Combinator from Egypt and ended up spending three years building a startup and like hiring people and doing that whole thing.

(Natalie wrote more about our startup in Lanyrd: from idea to exit - the story of our startup.)

The only thing I'll say about that is everything in the... Startups have to give the impression that everything's super easy and fun and cool all the time, because people say, "How's your startup going?" And the only correct answer is, "Oh man, it's amazing. It's doing so well." Because everyone has to lie about the misery, pain, anguish and stress that's happening behind the scenes.

So it was a very interesting three years, and we built some cool stuff and we learnt a lot, and I don't regret it, but do not take startups lightly.

#

So a year and a half ago, we ended up selling Lanyrd to Eventbrite and moving out to San Francisco. And at Eventbrite, I've been mostly on the management team building side of things, but occasionally managing to sneak some code out as well.

#

The one thing I want to show you from Eventbrite, because I really want to open source this thing, is again at Hack Day, we built a tool called the Tikibar, which is essentially like the Django debug toolbar, but it's designed to be run in production. Because the really tough things to debug don't happen in your dev environment. They happen in production when you're hitting a hundred million row database or whatever.

And so the Tikibar is designed to add as little overhead as possible, but to still give you detailed timelines of SQL queries that are executing and service calls and all of that kind of stuff. It's called the Tikibar because I really like Tikibars.

#

And the best feature is if a page takes over 500 milliseconds to load, the eyes on the Tiki God glow red in disapproval at you.

If anyone wants a demo of that, come and talk to me. I would love to get a few more instrumentation hooks into Django to make this stuff easier.
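As a rough illustration of the idea, and not the Tikibar's actual implementation, a low-overhead timeline recorder might look something like this. The `Timeline` class, its method names and the injectable clock are all hypothetical. Only the 500 millisecond threshold comes from the talk.

```python
import time
from contextlib import contextmanager

SLOW_PAGE_MS = 500  # the threshold mentioned in the talk


class Timeline:
    """Collects timed events (SQL queries, service calls) for one request.

    Hypothetical sketch: the clock is injectable so it can be tested
    without sleeping.
    """

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._start = clock()
        self.events = []

    @contextmanager
    def record(self, kind, label):
        started = self._clock()
        try:
            yield
        finally:
            ended = self._clock()
            self.events.append({
                "kind": kind,
                "label": label,
                # When the event began, relative to the start of the request.
                "offset_ms": (started - self._start) * 1000,
                "duration_ms": (ended - started) * 1000,
            })

    def total_ms(self):
        return (self._clock() - self._start) * 1000

    def is_slow(self):
        # This is the point at which the eyes would glow red.
        return self.total_ms() > SLOW_PAGE_MS
```

Wrapping a query in `with timeline.record("sql", query):` adds two clock reads and one list append per event, which is about as cheap as this kind of instrumentation gets.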

(The Tikibar was eventually open sourced as eventbrite/tikibar on GitHub.)

#

This has been a whistle-stop tour of the highlights of my career working with Django.

And actually, in putting this presentation together, I realized that really it's that Rob Curley influence from all the way back in 2003. The reason I love Django is it makes it really easy to build cool shit and to ship it. And, you know, swearing aside, I think that's a reasonable moral to take away from this.

Colophon

I put this annotated version of my 10-year-old talk together using a few different tools.

I fetched the audio from YouTube using yt-dlp:

yt-dlp -x --audio-format mp3 \
  "https://youtube.com/watch?v=wqii_iX0RTs"

I then ran the mp3 through MacWhisper to generate an initial transcript. I cleaned that up by pasting it into Claude Opus 4 with this prompt:

Take this audio transcript of a talk and clean it up very slightly - I want paragraph breaks and tiny edits like removing ums or "sort of" or things like that, but other than that the content should be exactly as presented.

I converted a PDF of the slides into a JPEG per page using this command (found with the llm-cmd plugin):

pdftoppm -jpeg -jpegopt quality=70 django-birthday.pdf django-birthday
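If you wanted to script the two command-line steps above, a small Python wrapper could look like this. This is a sketch rather than what I actually ran: the function names are made up, and it assumes both yt-dlp and pdftoppm are already installed and on your PATH. The flags are exactly the ones shown in the commands above.

```python
import subprocess


def audio_command(url):
    # Same flags as the yt-dlp command above: extract audio as mp3.
    return ["yt-dlp", "-x", "--audio-format", "mp3", url]


def slides_command(pdf_path, output_prefix, quality=70):
    # Same flags as the pdftoppm command above: one JPEG per page.
    return [
        "pdftoppm", "-jpeg", "-jpegopt", f"quality={quality}",
        pdf_path, output_prefix,
    ]


def run_all(url, pdf_path, output_prefix):
    # check=True raises CalledProcessError if either tool fails.
    for command in (audio_command(url), slides_command(pdf_path, output_prefix)):
        subprocess.run(command, check=True)
```

Passing the arguments as lists avoids any shell quoting issues with the URL.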

Then I used my annotated presentations tool (described here) to combine the slides and transcript, making minor edits and adding links using Markdown in that interface.

Tags: adrian-holovaty, devfort, django, history, jacob-kaplan-moss, lawrence, lawrence-com, lawrence-journal-world, python, my-talks, the-guardian, annotated-talks